SLMs > LLMs

Plus: Kaggle’s AI agent course, and more on why researchers think the singularity will be here in just four years

Hello, Prohuman

Today, we will talk about these stories:

Small Language Models (Research paper by AWS)

What Kaggle learned teaching agents at scale

Translation data hints at singularity

AWS just embarrassed big AI models

Research paper

Most AI agent demos fall apart the moment they touch real tools.

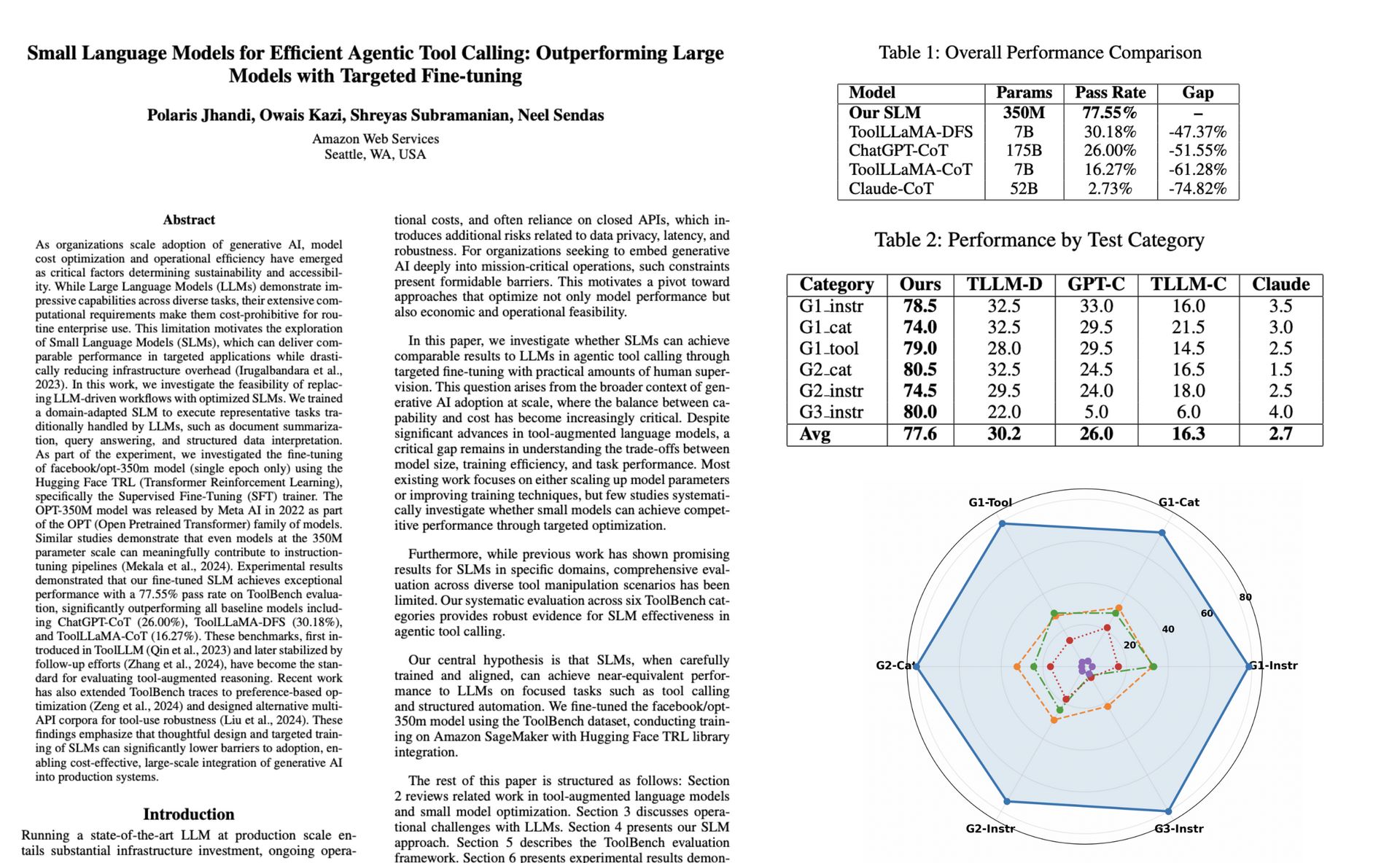

AWS researchers took Meta’s old OPT-350M model and fine-tuned it for tool use. On ToolBench, the 350M model hit a 77.55% success rate, while the ChatGPT-CoT baseline stalled around 26%. This test focused on structured API calls, not writing text, and the results landed quietly in a research paper most people skipped.
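To make "structured API calls" concrete, here is a minimal sketch of how a pass rate over tool calls could be scored. It is not ToolBench's actual evaluation; the `search_flights` API, its `SPEC`, and the sample outputs are all hypothetical, chosen only to show why a chatty answer fails where a terse one passes.

```python
import json

# Hypothetical API spec: tool name -> required argument names and types.
SPEC = {"search_flights": {"origin": str, "destination": str, "max_price": int}}

def is_valid_call(raw: str) -> bool:
    """Return True only if raw is pure JSON naming a known tool with correct args."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError:
        return False  # any extra prose around the JSON counts as a failure
    if not isinstance(call, dict):
        return False
    name, args = call.get("name"), call.get("arguments")
    if not isinstance(name, str) or name not in SPEC or not isinstance(args, dict):
        return False
    params = SPEC[name]
    # Exactly the expected argument names, each with the expected type.
    return args.keys() == params.keys() and all(
        isinstance(args[key], kind) for key, kind in params.items()
    )

# Made-up model outputs: one clean call, one verbose, one with a wrong type.
outputs = [
    '{"name": "search_flights", "arguments": {"origin": "FCO", "destination": "JFK", "max_price": 600}}',
    'Sure! Here is the call: {"name": "search_flights"}',
    '{"name": "search_flights", "arguments": {"origin": "FCO", "destination": "JFK", "max_price": "cheap"}}',
]

results = [is_valid_call(o) for o in outputs]
pass_rate = sum(results) / len(results)
print(f"pass rate: {pass_rate:.2%}")
```

The strict check is the point: a call either parses and matches the spec or it does not, so politeness and partial answers earn nothing.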

This doesn’t mean big models are useless, but it shows how poorly they behave when precision matters. A model trained to do everything tends to be verbose or imprecise where the task calls for clean steps in a Thought-Action-Observation loop.
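For readers who haven't seen one, a Thought-Action-Observation loop can be sketched in a few lines. This is an illustration under stated assumptions, not the paper's code: `scripted_model` is a hypothetical stand-in for a fine-tuned model, and the `get_weather` tool and JSON step format are invented for the example.

```python
import json
from typing import Callable

# Hypothetical tool registry; names and signatures are illustrative only.
TOOLS: dict[str, Callable[..., str]] = {
    "get_weather": lambda city: f"18C and clear in {city}",
}

def scripted_model(history: list[str]) -> str:
    """Stand-in for a small fine-tuned model: emits one JSON step per turn."""
    if not any(line.startswith("Observation:") for line in history):
        return json.dumps({
            "thought": "I need the weather before answering.",
            "action": "get_weather",
            "args": {"city": "Rome"},
        })
    return json.dumps({
        "thought": "I have what I need.",
        "action": "finish",
        "args": {"answer": history[-1].removeprefix("Observation: ")},
    })

def run_agent(task: str, model=scripted_model, max_steps: int = 5) -> str:
    """Alternate model steps and tool calls until the model finishes."""
    history = [f"Task: {task}"]
    for _ in range(max_steps):
        step = json.loads(model(history))            # Thought + Action
        history.append(f"Thought: {step['thought']}")
        if step["action"] == "finish":
            return step["args"]["answer"]
        tool = TOOLS.get(step["action"])
        if tool is None:
            observation = f"error: unknown tool {step['action']!r}"
        else:
            observation = tool(**step["args"])       # run the tool
        history.append(f"Action: {step['action']} {json.dumps(step['args'])}")
        history.append(f"Observation: {observation}")  # feed the result back
    return "gave up: step budget exhausted"

print(run_agent("What is the weather in Rome?"))
```

Every turn has to be a parseable step. A model that wraps its action in extra prose breaks `json.loads` on the first line of the loop, which is the failure mode described above.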

The smaller model works because it was trained like a tool, not a personality.

Costs drop fast when a 350M model replaces a 175B one, a 500-fold cut in parameters, and latency falls sharply on local hardware. Privacy improves too, since these agents can run on-device without sending data to the cloud at midnight while your laptop fan spins up.
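A rough back-of-the-envelope check on that size gap, assuming 16-bit weights (2 bytes per parameter) and counting weights only. Real memory use also includes activations and caches, and cost does not scale perfectly with parameter count, so treat these as order-of-magnitude figures.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9

small = 350e6  # OPT-350M
large = 175e9  # a 175B-parameter model

ratio = large / small
small_gb = weight_memory_gb(small)
large_gb = weight_memory_gb(large)

print(f"parameter ratio: {ratio:.0f}x")
print(f"weights at 16-bit: {small_gb:.1f} GB vs {large_gb:.0f} GB")
```

Under these assumptions the small model's weights fit in well under a gigabyte, which is why on-device use is plausible at all.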

If clicking a button still takes five seconds, what else have we overbuilt?

Read full paper here

Kaggle’s agent course hit 1.5 million learners

One and a half million people don’t show up unless something real is happening.

Kaggle and Google just wrapped a five-day AI Agents intensive that drew over 1.5 million learners worldwide. The course went past chatbot demos and into how agents actually reason, plan, call tools, and fail in production, with content from Google, Cohere, NVIDIA, and others. By the end, more than 11,000 capstone projects had been submitted.

What stands out is how practical this was. Two million views on whitepapers and 3.3 million on notebooks tell you developers weren’t there for vibes. They wanted concrete architectures and working code they could run after work, late at night, laptop open and Discord buzzing.

This also confirms something obvious but often ignored: interest in agents is no longer theoretical.

When 160,000 people actively discuss the same technical material in one week, patterns form fast. Best practices harden, weak ideas get exposed, and “agent” stops meaning a chat wrapper and starts meaning a system with constraints and evaluation.

If this many builders are already practicing, what happens when agents stop being a side project and become the default tool?

Check this here

A quiet metric says AGI is closer

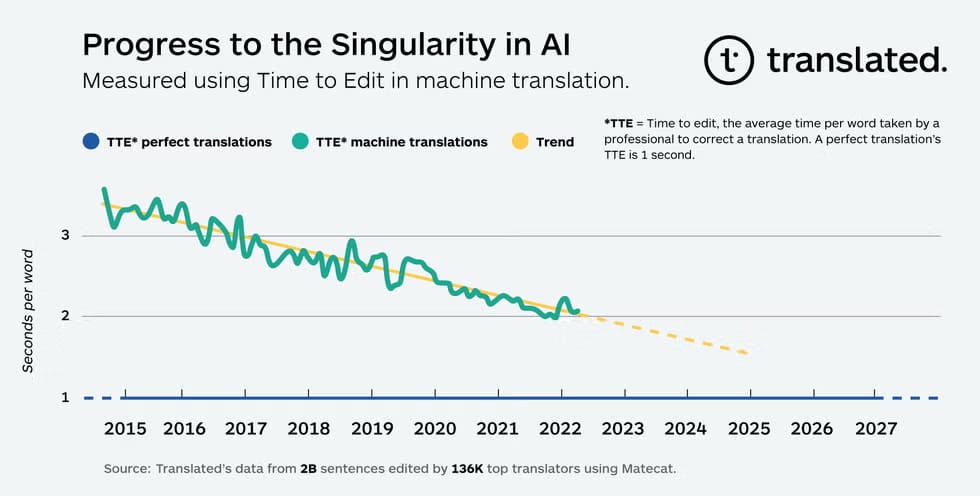

A translation company may have found the cleanest AGI signal yet.

Translated, a Rome-based firm, tracked how long professional editors take to fix AI translations versus human ones using a metric called Time to Edit. In 2015, editors needed about 3.5 seconds per word to correct machine output. Today it’s closer to 2 seconds, compared to roughly 1 second for human-to-human edits, based on more than 2 billion post-edits collected from 2014 to 2022.
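To see where a "few years away" estimate can come from, here is a minimal straight-line extrapolation of the numbers above. It is a sketch, not Translated's method. It assumes the 2-seconds figure is a 2022 value (the last year of the dataset), that the trend stays linear, and that 1 second per word is the human baseline; all three are assumptions layered on the quoted figures.

```python
def years_to_parity(year0: float, tte0: float,
                    year1: float, tte1: float,
                    human_tte: float) -> float:
    """Years after year1 until a linear Time to Edit trend reaches human_tte."""
    slope = (tte1 - tte0) / (year1 - year0)  # seconds per word, per year
    if slope >= 0:
        raise ValueError("Time to Edit is not decreasing; no parity date")
    return (human_tte - tte1) / slope

# Figures quoted in the text; 2022 as the "today" year is an assumption.
remaining = years_to_parity(2015, 3.5, 2022, 2.0, human_tte=1.0)
parity_year = 2022 + remaining

print(f"about {remaining:.1f} more years, around {parity_year:.0f}")
```

A straight line through two points is a blunt tool, and a curve that flattens near human level would push the date out. Still, it shows the estimate is simple arithmetic on the editing data rather than a guess.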

This matters because it measures labor, not vibes or benchmarks tuned for demos. When real editors, late in the day with deadlines ticking, steadily spend less time fixing machines, that’s a concrete signal that capability is closing in on human performance.

It doesn’t prove AGI, but it does show sustained progress in one of the hardest human skills.

If speech translation reaches human parity within four to five years, entire classes of global work change fast. Support, education, diplomacy, and media all get reshaped whether researchers agree on definitions or not.

If this curve holds, what other human tasks are already quietly halfway gone?

Prohuman team

Covers emerging technology, AI models, and the people building the next layer of the internet. |  Founder |

Writes about how new interfaces, reasoning models, and automation are reshaping human work. |  Founder |
