How to train AI to make real decisions

A complete guide on how to reverse engineer any LLM to automate decision making and get the best results

Hello, Prohuman

Today, we will talk about how you can reverse engineer any LLM for strategic decision making and more.

Complete guide on how to turn LLMs into strategic thinkers and decision makers

We reverse engineered how to get LLMs to make strategic decisions. Most people treat AI like a magic 8-ball. Ask a question, get an answer, done.

That's not decision-making. That's guessing with extra steps.

Here's what actually works:

Every expert knows this: LLMs default to pattern matching, not strategic thinking.

They'll give you the most common answer, not the best one.

Strategic decisions require:

Understanding tradeoffs

Evaluating multiple futures

Weighing second-order effects

Most prompts skip all of this.

The breakthrough came from forcing models to separate analysis from conclusion.

Here's the base framework:

Prompt:

You are making a strategic decision about [DECISION].

Step 1: List all possible options (minimum 5)

Step 2: For each option, identify 3 second-order consequences

Step 3: Rate each option on: speed, cost, risk, reversibility (1-10 scale)

Step 4: Identify which constraints matter most in this context

Step 5: Make your recommendation and explain the tradeoff you're accepting

Example: Should we rebuild our codebase or refactor incrementally?

My prompt:

Strategic decision: Rebuild vs refactor a 200k line Python monolith.

Context:

- 6 engineers

- Paying customers

- Tech debt causing 2-3 outages/month

- Competitors shipping faster

Step 1: List all realistic options

Step 2: For each, map consequences at 3 months, 6 months, 12 months

Step 3: Score on: team morale, revenue risk, technical debt reduction, velocity

Step 4: What kills us faster - more outages or slower features?

Step 5: Recommendation with explicit tradeoffs

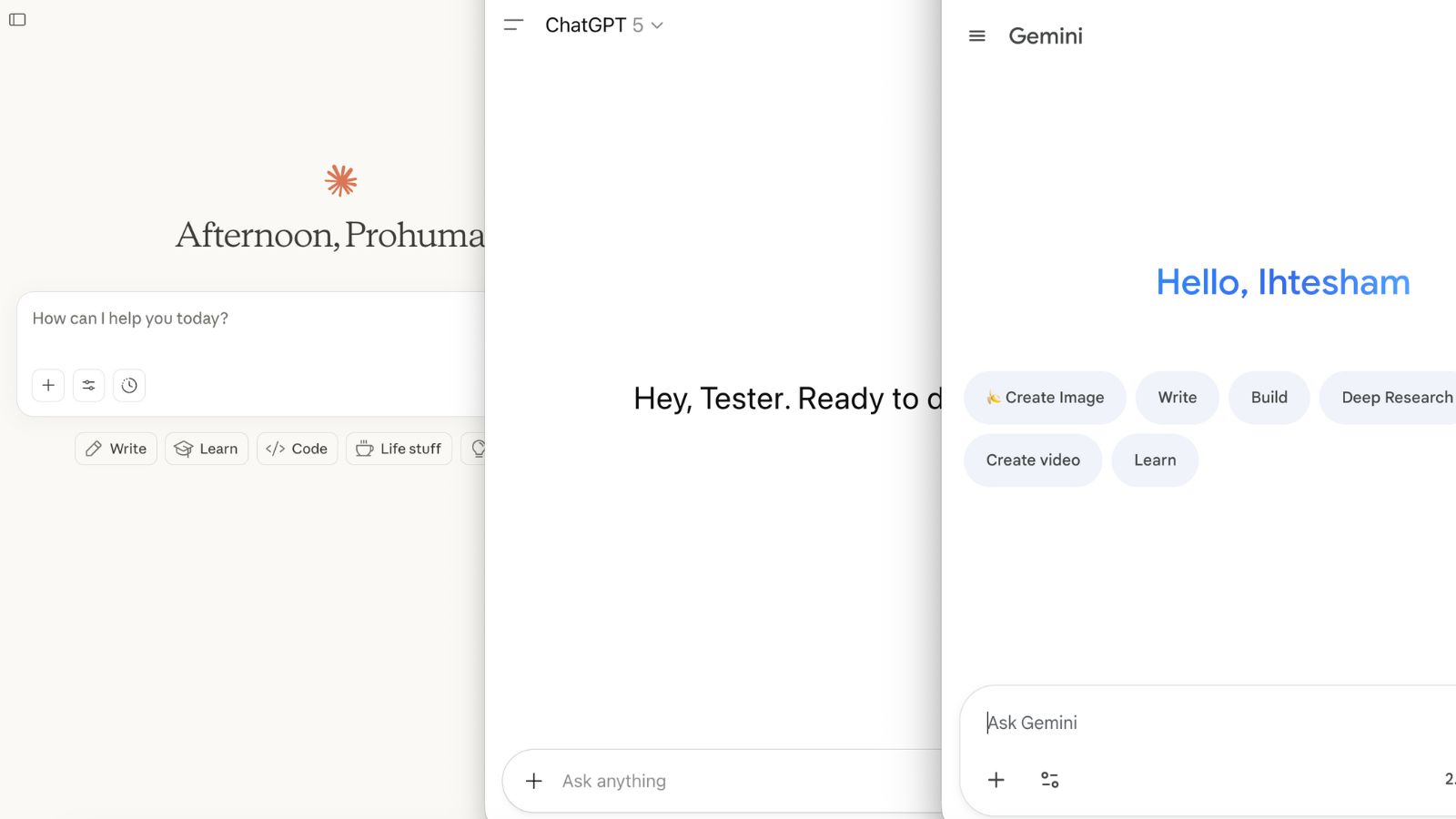

First half of the results provided by the LLM

The model's output was completely different from the typical "it depends" garbage. It identified 7 options I hadn't considered, including hybrid approaches.

It mapped out that a full rebuild would tank velocity for 4-6 months, but incremental refactoring would let outages continue for 8+ months.

It recommended a "strangler fig" approach - new features in the new architecture, gradual migration of the old code.

The reasoning was airtight.
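If you run this framework often, it helps to assemble the prompt in code instead of retyping it. Here's a minimal sketch in Python. The function name `build_decision_prompt` and the `context` list are my own illustration, not part of any library, and the step wording is copied from the base framework above.

```python
# The five steps of the base framework, in order.
STEPS = [
    "List all possible options (minimum 5)",
    "For each option, identify 3 second-order consequences",
    "Rate each option on: speed, cost, risk, reversibility (1-10 scale)",
    "Identify which constraints matter most in this context",
    "Make your recommendation and explain the tradeoff you're accepting",
]


def build_decision_prompt(decision: str, context: list[str]) -> str:
    """Assemble the base framework prompt (hypothetical helper)."""
    if not decision.strip():
        raise ValueError("decision must not be empty")
    lines = [f"You are making a strategic decision about {decision}.", ""]
    if context:
        # Context goes before the steps so the model reads it first.
        lines.append("Context:")
        lines.extend(f"- {item}" for item in context)
        lines.append("")
    lines.extend(f"Step {i}: {step}" for i, step in enumerate(STEPS, start=1))
    return "\n".join(lines)


prompt = build_decision_prompt(
    "rebuilding vs refactoring a 200k line Python monolith",
    [
        "6 engineers",
        "Paying customers",
        "Tech debt causing 2-3 outages/month",
        "Competitors shipping faster",
    ],
)
print(prompt)
```

The output is plain text, so you can paste it into any chat interface or send it through whatever client you already use.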

Key insight: strategic decisions need constraints.

Without them, models optimize for imaginary perfect scenarios.

Add these to every strategic prompt:

Constraints:

- Timeline: [X weeks/months]

- Budget: [$ or team size]

- Risk tolerance: [scale 1-10]

- What we CANNOT do: [explicit list]

- What failure looks like: [specific bad outcome]

Models suddenly stop suggesting "hire 10 more engineers" when you tell them you have 3 months and $0.
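One way to make sure you never skip a constraint is to treat the block as a small data structure that refuses to render when a field is missing. This is a sketch, not a standard: the `Constraints` class and its field names are assumptions of mine, and the example values are placeholders apart from the "3 months and $0" mentioned above.

```python
from dataclasses import dataclass, field


@dataclass
class Constraints:
    """The five constraint lines from the block above (hypothetical class)."""

    timeline: str
    budget: str
    risk_tolerance: int  # 1-10 scale
    cannot_do: list[str] = field(default_factory=list)
    failure: str = ""

    def render(self) -> str:
        # Refuse to build a prompt with vague or missing constraints.
        if not 1 <= self.risk_tolerance <= 10:
            raise ValueError("risk_tolerance must be between 1 and 10")
        if not self.cannot_do or not self.failure.strip():
            raise ValueError("state what you cannot do and what failure looks like")
        return "\n".join([
            "Constraints:",
            f"- Timeline: {self.timeline}",
            f"- Budget: {self.budget}",
            f"- Risk tolerance: {self.risk_tolerance}/10",
            f"- What we CANNOT do: {'; '.join(self.cannot_do)}",
            f"- What failure looks like: {self.failure}",
        ])


# Placeholder values for illustration only.
block = Constraints(
    timeline="3 months",
    budget="$0",
    risk_tolerance=3,
    cannot_do=["hire more engineers"],
    failure="outages continue past the deadline",
).render()
print(block)
```

Append the rendered block to the end of any strategic prompt.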

Another example: Market entry strategy.

Prompt:

Strategic decision: Which market should we enter first?

Options: Enterprise SaaS, SMB self-serve, Developer tools

Context:

- $2M funding

- 8-person team

- No sales team yet

- Product is 70% built

For each option, model:

1. Time to first revenue

2. CAC and sales cycle length

3. Competitive intensity

4. Pivot difficulty if wrong

Then rank by: probability of survival in 18 months.

Constraints:

- Must reach $50k MRR in 12 months

- Cannot hire sales before $20k MRR

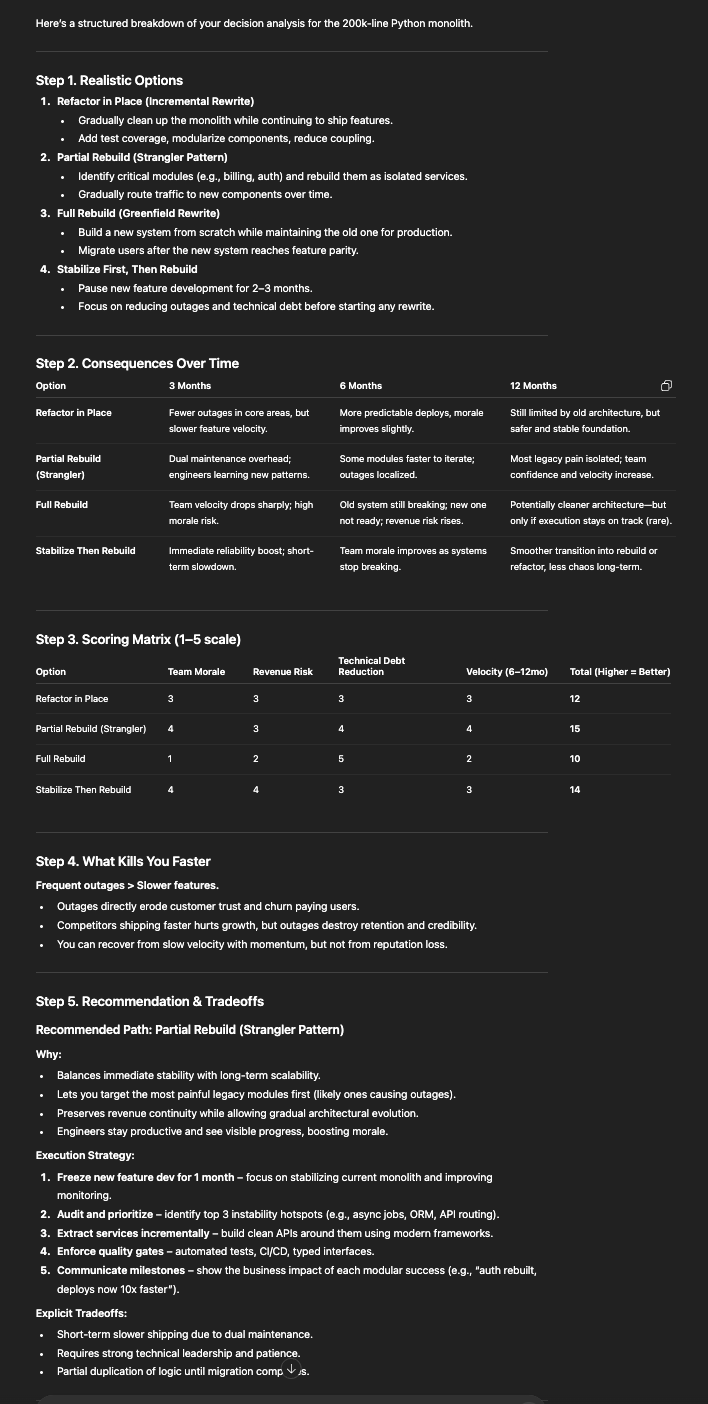

- Technical founder, not sales backgroundThe model identified that developer tools had fastest time-to-revenue but lowest ceiling. Enterprise had highest revenue potential but would burn runway before closing deals. Recommended SMB self-serve with a specific PLG motion, THEN upsell to enterprise.

The output (its the first part)

It even suggested which features to cut to ship faster.

This is strategic thinking.
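Both examples ask the model to score options against criteria. Once the scores come back, it can help to rank them yourself with your own weights, so the tradeoff is visible in numbers rather than buried in prose. Below is a rough sketch. The function `rank_options`, the criterion keys, the weights, and every score in the example are placeholders I made up, not output from a model.

```python
# On a 1-10 scale, "risk" is the one criterion where higher is worse,
# so it is flipped (11 - value) before weighting.
HIGHER_IS_WORSE = {"risk"}


def rank_options(
    scores: dict[str, dict[str, int]],
    weights: dict[str, float],
) -> list[tuple[str, float]]:
    """Return (option, weighted average) pairs, best first."""
    total_weight = sum(weights.values())
    ranked = []
    for option, row in scores.items():
        total = 0.0
        for criterion, weight in weights.items():
            value = row[criterion]
            if not 1 <= value <= 10:
                raise ValueError(f"{option}/{criterion} must be 1-10")
            if criterion in HIGHER_IS_WORSE:
                value = 11 - value
            total += weight * value
        ranked.append((option, total / total_weight))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Placeholder scores and weights, for illustration only.
weights = {"speed": 2.0, "efficiency": 1.0, "risk": 2.0, "reversibility": 1.0}
scores = {
    "Enterprise SaaS": {"speed": 3, "efficiency": 4, "risk": 8, "reversibility": 4},
    "SMB self-serve": {"speed": 7, "efficiency": 7, "risk": 5, "reversibility": 7},
    "Developer tools": {"speed": 8, "efficiency": 6, "risk": 6, "reversibility": 6},
}
for option, score in rank_options(scores, weights):
    print(f"{option}: {score:.2f}")
```

The weights are where your constraints show up. If runway is the thing that kills you, weight speed and risk higher and see whether the ranking changes.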

The pattern that works across all strategic decisions:

1. Force explicit option generation

"List 5-7 distinct approaches, not variations of the same idea"

2. Demand consequence mapping

"For each option, what happens at T+3mo, T+6mo, T+12mo"

3. Make tradeoffs visible

"What are you sacrificing if you choose this?"

4. Add real constraints

"Here's what we can't do and what kills us"

5. Require decisiveness

"Pick one. Explain which risk you're accepting."

Advanced technique: Multi-agent debate.

Run the same decision through 3 different "advisors" with different risk profiles.

Prompt structure:

Agent 1 (Conservative): Optimize for not losing

Agent 2 (Aggressive): Optimize for maximum upside

Agent 3 (Pragmatic): Optimize for execution speed

Each agent:

- Recommends their approach

- Critiques the other approaches

- Identifies blindspots in their own reasoning

Then synthesize into a final recommendation.
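The debate structure is easy to generate from a small table of advisors, which also makes it simple to add or swap a risk profile. Here's a sketch under the assumption that you send everything as a single prompt. `build_debate_prompt` is a name I invented, and the usage example borrows its figures from the pricing example in this post.

```python
# Advisor name -> what that advisor optimizes for.
ADVISORS = {
    "Conservative": "Optimize for not losing",
    "Aggressive": "Optimize for maximum upside",
    "Pragmatic": "Optimize for execution speed",
}

# What every advisor has to do.
EACH_AGENT = [
    "Recommends their approach",
    "Critiques the other approaches",
    "Identifies blindspots in their own reasoning",
]


def build_debate_prompt(decision: str, facts: list[str]) -> str:
    """Build one prompt with all advisors (hypothetical helper)."""
    lines = [f"Three advisors debate this decision: {decision}", ""]
    if facts:
        lines.extend(facts)
        lines.append("")
    for number, (name, goal) in enumerate(ADVISORS.items(), start=1):
        lines.append(f"Agent {number} ({name}): {goal}")
    lines += ["", "Each agent:"]
    lines.extend(f"- {task}" for task in EACH_AGENT)
    lines += ["", "Then synthesize into a final recommendation."]
    return "\n".join(lines)


debate_prompt = build_debate_prompt(
    "our pricing strategy",
    [
        "Current: $49/mo flat rate",
        "Revenue: $80k MRR, 1,634 customers",
        "Churn: 7% monthly",
    ],
)
print(debate_prompt)
```

A variation worth trying is one call per advisor followed by a separate synthesis call, so the advisors can't quietly converge inside a single response. Whether that actually improves the result is something to test on your own decisions.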

Real example I used last week: Pricing strategy.

Prompt:

Three advisors debate our pricing strategy:

Current: $49/mo flat rate

Revenue: $80k MRR, 1,634 customers

Churn: 7% monthly

Conservative advisor: Proposes pricing changes that minimize churn risk

Aggressive advisor: Proposes pricing that maximizes revenue in 6 months

Pragmatic advisor: Proposes what we can actually implement this quarter

Each advisor must:

- Propose specific pricing tiers

- Model impact on MRR and churn

- Identify what could go catastrophically wrong

- Explain why the other approaches fail

Then: Synthesis recommendation with implementation steps.

The output was remarkable.

Conservative wanted minor increases with grandfathering.

Aggressive wanted 3-tier value-based pricing with 60% price increase at top.

Pragmatic wanted usage-based pricing with simple implementation.

The synthesis identified that we could implement usage-based quickly, test with 10% of new signups, and iterate based on data.

Actual strategic thinking with risk management built in.
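Before trusting any advisor's MRR model, sanity-check the baseline yourself. The sketch below uses only the numbers from the prompt ($49/mo, 1,634 customers, 7% monthly churn). It assumes churn is customer churn, stays constant, and that no new customers arrive, which is a simplification. The break-even helper also assumes a price increase hits every customer, which is not what the tiered proposals describe.

```python
price = 49             # $/month, flat rate
customers = 1_634
monthly_churn = 0.07   # assumed constant customer churn

# Baseline: 49 * 1,634 is roughly the $80k MRR quoted in the prompt.
mrr = price * customers
churned_customers = customers * monthly_churn
churned_mrr = mrr * monthly_churn

# Share of today's customers still paying after 12 months with no new signups.
retained_after_12 = (1 - monthly_churn) ** 12


def break_even_retention(price_increase: float) -> float:
    """Fraction of customers you must keep for revenue to stay flat
    if prices rise by `price_increase` for everyone (simplifying assumption)."""
    return 1 / (1 + price_increase)


print(f"MRR: ${mrr:,}")
print(f"Customers lost per month: {churned_customers:.0f}")
print(f"MRR lost per month: ${churned_mrr:,.0f}")
print(f"Retained after 12 months: {retained_after_12:.0%}")
print(f"Break-even retention at +60%: {break_even_retention(0.60):.1%}")
```

If the model's projections disagree wildly with numbers like these, ask it to show its assumptions before you act on the recommendation.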

LLMs are trained on strategic documents - business cases, research papers, policy debates.

But they need structure to access that reasoning mode. Generic prompts trigger generic pattern matching.

Structured strategic prompts trigger strategic reasoning. The model already knows how to think strategically.

You just have to ask correctly.

Template you can copy:

Strategic Decision Framework:

Decision: [Clear, specific decision]

Context (be specific):

- Current state: [metrics, situation]

- Resources: [team, budget, time]

- Stakes: [what happens if wrong]

Generate Options:

- List 5-7 distinct approaches

- No variations, only fundamentally different strategies

Consequence Mapping:

For each option, analyze:

- 3-month outcome

- 6-month outcome

- 12-month outcome

- What breaks if this goes wrong

Scoring Matrix:

Rate each option (1-10):

- Speed to impact

- Resource efficiency

- Risk level

- Reversibility

- Strategic alignment

Constraints (hard limits):

- Timeline: [X]

- Budget: [Y]

- Cannot do: [Z]

- Failure mode: [What kills us]

Decision:

- Chosen approach

- Tradeoff being accepted

- Leading indicators to watch

- Decision reversal trigger

One more advanced technique: Red team your own decision.

After getting a recommendation, run this:

Prompt:

You just recommended [DECISION].

Now play devil's advocate:

- What assumptions could be wrong?

- What evidence contradicts this approach?

- If this fails in 6 months, what will the post-mortem say?

- What would a competitor do differently?

- What are we not seeing because of cognitive biases?

Then: Should we change the decision? Or accept these risks?

The meta-lesson:

Strategic thinking isn't about intelligence.

It's about process.

Force the model through a strategic process, you get strategic output. Skip the process, you get pattern-matched vibes.
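In code, "process" can be as simple as chaining two calls: get the recommendation, then feed it into the red-team prompt from earlier. The sketch below is one possible shape. `ask` stands in for whatever function sends a prompt to your model and returns text; it is a placeholder, not a real client API, and `fake_ask` exists only so the example runs offline.

```python
from typing import Callable

RED_TEAM_QUESTIONS = [
    "What assumptions could be wrong?",
    "What evidence contradicts this approach?",
    "If this fails in 6 months, what will the post-mortem say?",
    "What would a competitor do differently?",
    "What are we not seeing because of cognitive biases?",
]


def build_red_team_prompt(recommendation: str) -> str:
    """Fill the red-team template with the model's own recommendation."""
    lines = [f"You just recommended {recommendation}.", "", "Now play devil's advocate:"]
    lines.extend(f"- {question}" for question in RED_TEAM_QUESTIONS)
    lines += ["", "Then: Should we change the decision? Or accept these risks?"]
    return "\n".join(lines)


def decide_then_red_team(decision_prompt: str, ask: Callable[[str], str]) -> dict[str, str]:
    """Step 1: get a recommendation. Step 2: make the model attack it."""
    recommendation = ask(decision_prompt)
    critique = ask(build_red_team_prompt(recommendation))
    return {"recommendation": recommendation, "critique": critique}


def fake_ask(prompt: str) -> str:
    # Stand-in for a real model call so the sketch runs without a network.
    if "devil's advocate" in prompt:
        return "(critique goes here)"
    return "the strangler fig approach"


result = decide_then_red_team(
    "Strategic decision: Rebuild vs refactor a 200k line Python monolith.",
    fake_ask,
)
print(result["recommendation"])
print(result["critique"])
```

In a chat interface you'd send the red-team prompt as a follow-up message in the same conversation. Passing the recommendation text explicitly, as done here, is an assumption that suits stateless API calls.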

Most people are using AI like a search engine when they should be using it like a strategic advisor with a structured framework.

LLMs can make genuinely strategic decisions.

But only if you:

Force explicit option generation

Demand consequence mapping

Make tradeoffs visible

Add real constraints

Require decisiveness

The models already have the capability. Most prompts just never activate it.

Prohuman team

Founder. Covers emerging technology, AI models, and the people building the next layer of the internet.

Founder. Writes about how new interfaces, reasoning models, and automation are reshaping human work.
