Google’s AI is breaking recipe sites

Plus: Marketing’s AI copyright mess

Hello, Prohuman

Today, we will talk about these stories:

The quiet collapse of recipe search

Why AI budgets are backwards

Disney shows how careful AI gets

The Future of Shopping? AI + Actual Humans.

AI has changed how consumers shop by speeding up research. But one thing hasn’t changed: shoppers still trust people more than AI.

Levanta’s new Affiliate 3.0 Consumer Report reveals a major shift in how shoppers blend AI tools with human influence. Consumers use AI to explore options, but when it comes time to buy, they still turn to creators, communities, and real experiences to validate their decisions.

The data shows:

Only 10% of shoppers buy through AI-recommended links

87% discover products through creators, blogs, or communities they trust

Human sources like reviews and creators rank higher in trust than AI recommendations

The most effective brands are combining AI discovery with authentic human influence to drive measurable conversions.

Affiliate marketing isn’t being replaced by AI; it’s being amplified by it.

Why are food blogs losing traffic?

Image Credits: Google

Google’s AI is now cooking dinner for people.

Since March, Google’s AI Mode has been generating recipes directly in search results, often by stitching together instructions from 8 to 10 different creators into one simplified version. Recipe writers report steep traffic drops, including one site that lost 80% of visits in two years, right as the holiday season should be paying the bills.

I think this lands harder than past search changes because it breaks trust, not just traffic. When a recipe turns into a thin list of steps stripped of judgment, testing, and context, readers stop learning and creators lose the reason to show up.

Some bloggers are shifting to video or subscriptions, but most cannot afford to disappear from Google entirely. Cookbooks are selling better this year, yet even those are being scraped and resold by bots.

At some point, standing in a kitchen at 6pm, people will notice when the food keeps coming out wrong.

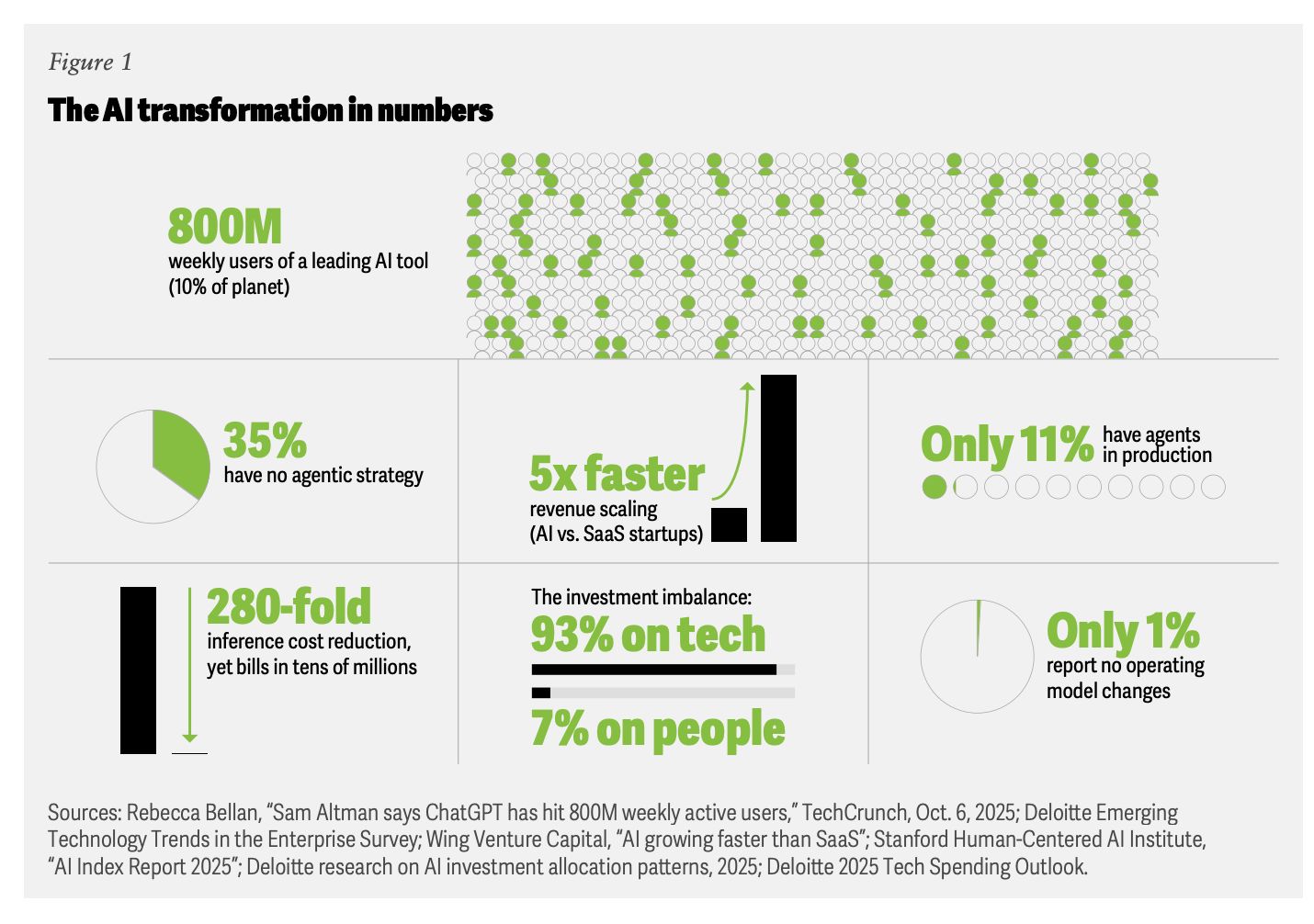

We bought the tools, skipped the people

Image Credits: Fortune

Most companies are spending on AI like it installs itself.

Deloitte CTO Bill Briggs says companies are putting 93% of their AI budgets into technology and only 7% into people, training, and workflow redesign. He shared the stat while discussing Deloitte’s latest Tech Trends report from New York, as firms move from small pilots toward AI at scale.

This rings true because it matches how enterprises always behave under pressure. Buying software feels decisive, while changing jobs, incentives, and habits feels slow and risky, so leaders default to what fits neatly into a budget line.

The cost shows up as falling AI usage, down 15%, and rising “shadow AI,” with 43% of workers bypassing approved tools because the unofficial ones are easier. Trust collapses, even as spending keeps rising.

If the tools are ready but the people are not, the real question is how long companies will keep paying for systems their employees quietly avoid using.

Use the tool, accept the risk

Image Credits: MSN

The lawyers are no longer saying no.

On the Digiday Podcast, IP lawyer Rob Driscoll explains how brands have moved from banning generative AI to requiring it, even as copyright and trademark risks stay unresolved. Disney’s deal with OpenAI, which allows AI-generated Mickey Mouse and Darth Vader content under tight controls, shows how much legal effort it takes to make this workable.

What stands out is how normalized uncertainty has become. Brands know AI output can accidentally copy existing work or weaken trademark protection, and many are proceeding anyway because speed and scale matter more than clean ownership.

The safer players are paying for private, enterprise AI tools so their inputs do not train shared models. Others are accepting that they may not fully own what they publish, as long as it performs and does not trigger a lawsuit.

If using AI is now mandatory, the real decision is how much legal risk a brand is willing to live with when the lights are on and the campaign is live.

Prohuman team

Founder. Covers emerging technology, AI models, and the people building the next layer of the internet.

Founder. Writes about how new interfaces, reasoning models, and automation are reshaping human work.

Free Guides

Explore our free guides and products to get into AI and master it.

All of them are free to access and will stay free for you.

Feeling generous?

You know someone who loves breakthroughs as much as you do.

Share The Prohuman. It’s how smart people stay one update ahead.